The Laboratory for Perceptual Robotics is committed to experimental

research in robotics and autonomous, embedded systems. Our

facilities for conducting this research have been assembled with the help of the

National Science Foundation, DARPA, and the State of Massachusetts.

Major components of the infrastructure are listed below.

|

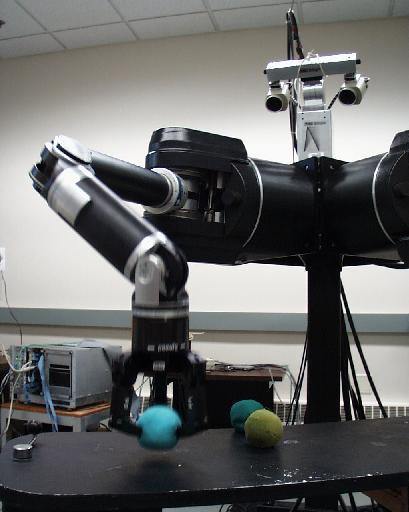

Dexter: the UMass Bi-Manual Dexterity Platform

|

Dexter---the UMass Platform for Studying Bi-Manual Dexterity---consists

of a BiSight stereo head with 4

mechanical and 3 optical degrees of freedom and four microphones

for localizing and interpreting acoustic sources, and two whole-arm

manipulators (WAMs), each equipped with a three-fingered

Barrett hand with fingertip tactile load cells (ATI). Three VME

cages host the computing system to control the integrated

platform. Research is underway on mechanisms for learning

hierarchical control knowledge - categories of objects,

activities, tasks, and situations - through continuous

interaction with the environment. We have developed techniques for

exploiting visual and haptic guidance in grasping

and manipulation tasks.

|

|

Mobile Platforms

|

Several mobile platforms are used in the

investigation of distributed robotic sensory systems, including

eight custom-designed UMass

uBots and two iRobot ATRV series robots. The UMass uBot is a

small two-wheeled robot equipped with multiple sensors, including

a Cyclovision panoramic camera, eight Sharp GP2D12 IR proximity

detectors, and microphones. The main processing unit is a

206 MHz StrongARM computer. A K-Team Kameleon with a Robotics

Extension Board equipped with a 22 MHz Motorola 68376 processor is

used for motion control. Communication with the uBots is handled

through an onboard Orinoco WaveLAN card. The ATRV series robots,

manufactured by iRobot, are

four-wheeled mobile platforms equipped with sonar sensors and

wireless Ethernet communications. LPR currently has an ATRV-Jr. and an ATRV-mini. We have

attached a panoramic camera to the ATRV-Jr., along with a Dell Inspiron

8200 laptop for control. All of our mobile platforms are used in

conjunction with the UMass Humanoid Torso and multiple

pan-tilt-zoom cameras located around the lab to create a ubiquitous

shared information network. These distributed resources are

utilized cooperatively to solve multiple, simultaneous perceptual

and motor tasks.

|

|

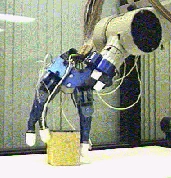

Quadruped Platform

|

Multi-legged locomotion platforms present similar challenges to

those of multi-fingered robot hands when it comes to coordinated

motion planning. Coordinated limb movements must serve both

stability and mobility concerns by sequencing the movement of

several independent kinematic chains. There are active communities

considering gait synthesis for walking platforms and now for

manipulation as well, but no unified

framework for solving these problems has yet emerged. We therefore built

Thing - a small, twelve-degree-of-freedom, four-legged walking robot -

to generalize techniques designed for quasistatic finger gaits

with dexterous robot hands to quasistatic locomotion gaits for

quadruped robots.
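As a rough illustration of the stability concern in quasistatic gaits, the sketch below checks whether the ground projection of the center of mass lies inside the polygon formed by the feet currently in stance. This is a generic textbook test, not a description of how Thing is actually controlled; the function names, foot coordinates, and units are hypothetical.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (x, y) on the ground plane, in meters (assumed units)


def _cross(o: Point, a: Point, b: Point) -> float:
    """z-component of the cross product (a - o) x (b - o)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def is_statically_stable(com_xy: Point, stance_feet: Sequence[Point]) -> bool:
    """Return True if the projected center of mass lies inside (or on the
    boundary of) the convex support polygon.

    stance_feet must list the feet in order around the polygon.
    Fewer than three stance feet cannot form a support polygon.
    """
    n = len(stance_feet)
    if n < 3:
        return False
    signs = [
        _cross(stance_feet[i], stance_feet[(i + 1) % n], com_xy)
        for i in range(n)
    ]
    # Inside a convex polygon, the point is on the same side of every edge.
    return all(s >= 0 for s in signs) or all(s <= 0 for s in signs)


# Hypothetical foot placements, listed counter-clockwise.
feet = [(0.1, 0.1), (-0.1, 0.1), (-0.1, -0.1), (0.1, -0.1)]
all_down = is_statically_stable((0.0, 0.0), feet)
# Lift the first foot: the remaining three feet form a support triangle.
shifted = is_statically_stable((-0.03, -0.03), feet[1:])
unshifted = is_statically_stable((0.05, 0.05), feet[1:])
```

In this sketch a quasistatic gait would shift the body so that the check passes for the three remaining stance feet before a leg is lifted, which is loosely analogous to keeping a grasp stable while one finger is repositioned.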

|

|

Utah/MIT Hand

|

An integrated hand-arm system employs a GE-P50 robot to carry one

of our two Utah/MIT hands and its actuator pack.

These hands have 4 fingers, each with 4 DOF actuated

independently using 32 pneumatic actuators and antagonistic

tendons. The compute architecture employs an analog controller for

tendon management and a VME/VxWorks distributed controller. This

platform has been used since 1991 to study collision-free

reaching and grasping.

|

|

Stanford/JPL Hand

|

Another GE-P50 carries our Stanford/JPL hand. This hand was

developed by Ken Salisbury in the early 1980s. It is a

three-fingered hand with three DOF per finger driven by an (n+1) tendon

scheme. Instrumentation includes tendon tension sensors, motor

position encoders, and Brock fingertip tactile sensors. The

fingertip has a minimum force sensing capacity of 0.01 lbf. The

controller is executed on a VME-based open torque control

architecture. Our research on this platform includes haptic models

of the dynamics in the phase portrait of the grasp formation

process. We have been able to show that optimal policies can be

learned that simultaneously acquire haptic models, estimate

control state, and control grasp formation.

|
