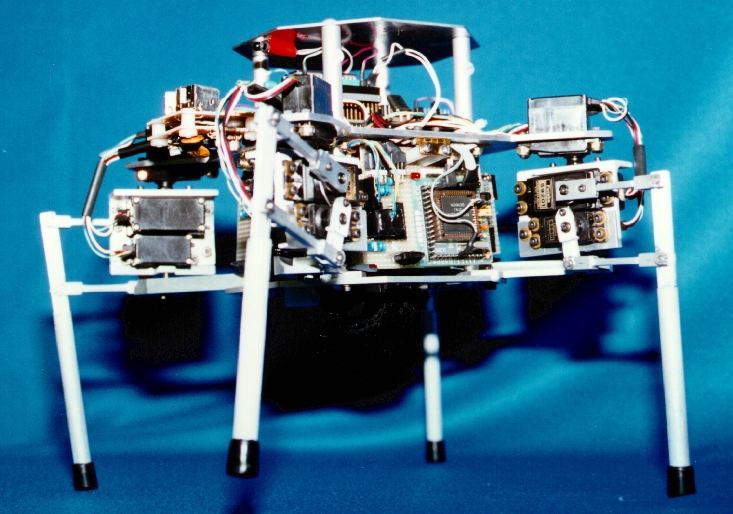

Multi-legged locomotion platforms present challenges similar to those of multi-fingered robot hands when it comes to coordinated motion planning. Coordinated limb movements must serve both stability and mobility by sequencing the movements of several independent kinematic chains. There are active communities studying gait synthesis for walking platforms, and now for manipulation as well, but so far no unified framework for solving these problems has emerged. So we built Thing, a small, twelve-degree-of-freedom, four-legged walking robot, to generalize techniques designed for quasistatic finger gaits with dexterous robot hands to quasistatic locomotion gaits for quadruped robots.

Incidentally, Thing got its name from the disembodied hand of the same name in The Addams Family, in case you were wondering.

Specifications:

| Item | Details |
|---|---|
| Height | 23 cm |
| Weight | 1.6 kg (2.0 kg with batteries) |
| Material | Milled aluminum |
| Power | Electronics: 125 mA; motors: 1.5 A |
| Batteries | Electronics: 5 V, 400 mAh NiCads; motors: 5 V, 1700 mAh NiCads |
| Processors | Central: M68HC11F1 (Micros board); legs: (4) M68HC811E2s (CGN modules) |
| Memory | 8K EEPROM, 32K EPROM, 32K RAM |
| Code | C and HC11 assembly |
| Actuators | (12) Futaba PWM servos (models S9201, S9601) |
| Sensors | Joint positions and torques; infrared proximity detectors |

Click on the image to the left to see a movie of Thing displaying some of its earliest walking gaits.


We use a distributed control approach to legged locomotion that constructs behavior on-line by activating combinations of reusable feedback control laws drawn from a control basis. Sequences of such controller activations result in flexible, aperiodic step sequences based on local sensory information. Locomotion policies identify a sequence of local objectives and the resources required to observe state and effect actions. Different tasks are achieved by varying the composition of control from the basis controllers rather than by special-purpose control design.
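To make "combinations of reusable feedback control laws" a little more concrete, the sketch below assumes the null-space ("subject-to") composition commonly described for control basis controllers: a subordinate controller's gradient step is projected into the null space of the dominant controller's gradient, so to first order it cannot undo the dominant objective. The two quadratic potentials, the gain, and every function name here are hypothetical stand-ins chosen for illustration; they are not Thing's actual controllers or code.

```python
# Minimal sketch of "subject-to" (null-space) composition of two basis
# controllers. The potentials below are hypothetical stand-ins, not Thing's.

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def project_out(v, g):
    """Return (I - g g^T / |g|^2) v, i.e. v with its component along g removed."""
    gg = dot(g, g)
    if gg == 0.0:
        # Dominant controller at its minimum: its null space is the whole
        # space, so the subordinate controller is left unconstrained.
        return list(v)
    k = dot(v, g) / gg
    return [vi - k * gi for vi, gi in zip(v, g)]


def compose_step(grad_dominant, grad_subordinate, x, gain=0.1):
    """One gradient step of 'subordinate subject to dominant'."""
    g1 = grad_dominant(x)
    g2 = grad_subordinate(x)
    primary = [-a for a in g1]                     # steepest descent on dominant
    secondary = project_out([-b for b in g2], g1)  # restricted to its null space
    return [xi + gain * (p + s) for xi, p, s in zip(x, primary, secondary)]


# Hypothetical 2-D example: the dominant potential (x0 - 1)^2 stands in for a
# stability objective; the subordinate x0^2 + (x1 - 3)^2 stands in for a
# motion objective that, left alone, would also pull x0 away from 1.
def grad_stability(x):
    return [2.0 * (x[0] - 1.0), 0.0]


def grad_progress(x):
    return [2.0 * x[0], 2.0 * (x[1] - 3.0)]


if __name__ == "__main__":
    x = [0.0, 0.0]
    for _ in range(50):
        x = compose_step(grad_stability, grad_progress, x)
    print([round(v, 3) for v in x])  # prints [1.0, 3.0]
```

Summing the two gradients without the projection would settle at x0 = 0.5, a compromise that sacrifices the dominant objective; the projection lets the subordinate controller make progress on x1 while x0 still converges to 1.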

Design and Implementation of a Four-Legged Walking Robot

A Control Basis For Multilegged Walking

Click on this image to see Thing sense terrain and adjust its gait by virtue of a closed-loop gait control policy.


Thing uses joint torque and position sensors to estimate contact positions and normals and then controls foot placement in a sequence that simultaneously stabilizes the platform and moves over the support surface. You may have to step through the video to see the tactile probing clearly.
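The torque-based contact estimate can be illustrated with the static relation τ = Jᵀf between joint torques and a force at the foot. The sketch below is a simplification under loudly stated assumptions: a planar two-link leg (Thing's legs actually have three joints each), hypothetical link lengths, gravity-compensated torque readings, and a frictionless point contact at the foot, so that the ground reaction force points along the surface normal. None of the names are from Thing's software.

```python
import math

L1, L2 = 0.10, 0.10  # hypothetical link lengths in meters (planar 2-link leg)


def foot_position(q1, q2):
    """Forward kinematics of a planar two-link leg with the hip at the origin."""
    return (L1 * math.cos(q1) + L2 * math.cos(q1 + q2),
            L1 * math.sin(q1) + L2 * math.sin(q1 + q2))


def jacobian(q1, q2):
    """Rows [[dx/dq1, dx/dq2], [dy/dq1, dy/dq2]] of the foot position."""
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [[-L1 * s1 - L2 * s12, -L2 * s12],
            [L1 * c1 + L2 * c12, L2 * c12]]


def torques_from_force(q1, q2, f):
    """tau = J^T f: joint torques produced by an external force f at the foot."""
    J = jacobian(q1, q2)
    return (J[0][0] * f[0] + J[1][0] * f[1],
            J[0][1] * f[0] + J[1][1] * f[1])


def estimate_contact(q1, q2, tau, eps=1e-9):
    """Solve J^T f = tau for f; return (contact point, unit normal) or None.

    Assumes a frictionless point contact, so f lies along the surface normal.
    Returns None near the singular straight-leg pose (q2 = 0) or when the
    sensed force is negligible.
    """
    J = jacobian(q1, q2)
    a, b = J[0][0], J[1][0]  # J^T = [[a, b],
    c, d = J[0][1], J[1][1]  #        [c, d]]
    det = a * d - b * c      # equals L1 * L2 * sin(q2)
    if abs(det) < eps:
        return None
    fx = (d * tau[0] - b * tau[1]) / det
    fy = (-c * tau[0] + a * tau[1]) / det
    mag = math.hypot(fx, fy)
    if mag < eps:
        return None
    return foot_position(q1, q2), (fx / mag, fy / mag)


if __name__ == "__main__":
    q1, q2 = -1.2, 1.0                        # hypothetical joint angles (rad)
    tau = torques_from_force(q1, q2, (0.0, 4.0))  # ground pushing straight up
    point, normal = estimate_contact(q1, q2, tau)
    print(point, normal)                      # normal is approximately (0, 1)
```

With friction the measured force is no longer aligned with the normal, which is one reason a real system would combine several probes or a friction model rather than rely on a single reading.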

Legged Locomotion over Irregular Terrain using the Control Basis Approach

The first policy Thing learned was how to rotate in place. Here's a movie showing the training; the number in the lower left of the frame is the elapsed time of the learning episode. The approach we employed made it possible for Thing to learn this gait in about 11 minutes, on-line, in a single training episode. Knowledge of the motor synergies involved in rotating under varying conditions significantly improved the acquisition of other behavior. For instance, with the prior rotate policy available, Thing learned to translate in roughly half the time it needed without it, and ultimately the average amount of translation per action was roughly double as well. We believe this phenomenon is consistent with the kind of staged, sequential development that human neonates exhibit, as observed by developmental psychologists such as Piaget.

Policies built using this framework for perceiving state and executing control are very robust to changing conditions. The picture at right shows Thing lying on its back and manipulating a spherical globe using the rotate walking gait that was acquired on flat terrain (sorry about the picture quality). Check out these videos of our four-fingered Utah/MIT hand using the same policy to rotate a cylinder (soda can) and screw in a lightbulb.


In the following demonstration, Thing knows its start position (leftmost X) and a relative displacement to the goal (rightmost X), but has no knowledge of the intervening obstacle. It uses its forward-looking IR proximity detectors to observe obstacles en route and maps them into its evolving configuration space (top row). Thing continuously replans paths to the goal using harmonic functions, updating the configuration space obstacle map as it acquires information about the world. The locomotion plan follows a streamline of the harmonic function by selecting one of two temporally extended actions (ROTATE-GAIT or TRANSLATE-GAIT) in a four-state finite state automaton (FSA). The state is derived from a 2-bit "interaction-based" state descriptor: one bit describes the convergence status of the ROTATE-GAIT action, and the other describes the convergence status of the TRANSLATE-GAIT action with respect to the current path plan. The control knowledge represented in these two actions and this FSA is adequate for finding paths in any cluttered world, provided that a path exists at the resolution of our configuration space map.
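A minimal sketch of harmonic-function path planning on an occupancy grid may help show why following a streamline reaches the goal. It assumes Dirichlet boundary conditions (obstacle and border cells held at 1, the goal at 0), Gauss-Seidel relaxation, and 4-connected steepest descent; the grid, tolerance, and function names are hypothetical, and the ROTATE-GAIT/TRANSLATE-GAIT automaton is not modeled. Once relaxed, every free cell equals the mean of its four neighbors, so the discrete potential has no local minima in the free region connected to the goal, and descent from any cell in that region ends at the goal.

```python
def harmonic_potential(grid, goal, tol=1e-12, max_sweeps=100000):
    """Relax a discrete harmonic function over the free cells of grid.

    grid: list of equal-length strings, '#' = obstacle, anything else = free.
    Obstacles and the outer border are held at 1.0 and the goal at 0.0; every
    other free cell is repeatedly replaced by the mean of its four neighbors
    (Gauss-Seidel) until the largest single update falls below tol.
    """
    rows, cols = len(grid), len(grid[0])
    phi = [[1.0] * cols for _ in range(rows)]
    free = [(r, c) for r in range(1, rows - 1) for c in range(1, cols - 1)
            if grid[r][c] != '#' and (r, c) != goal]
    for r, c in free:
        phi[r][c] = 0.5  # any initial guess converges to the same solution
    phi[goal[0]][goal[1]] = 0.0
    for _ in range(max_sweeps):
        biggest = 0.0
        for r, c in free:
            new = 0.25 * (phi[r - 1][c] + phi[r + 1][c]
                          + phi[r][c - 1] + phi[r][c + 1])
            biggest = max(biggest, abs(new - phi[r][c]))
            phi[r][c] = new
        if biggest < tol:
            break
    return phi


def descend(phi, start, goal, max_steps=10000):
    """Follow 4-connected steepest descent on phi from an interior start cell."""
    path = [start]
    r, c = start
    while (r, c) != goal and len(path) < max_steps:
        best = min([(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)],
                   key=lambda p: phi[p[0]][p[1]])
        if phi[best[0]][best[1]] >= phi[r][c]:
            break  # no lower neighbor: goal not reachable from this cell
        r, c = best
        path.append(best)
    return path


if __name__ == "__main__":
    grid = [
        "##########",
        "#........#",
        "#..####..#",
        "#.....#..#",
        "##########",
    ]
    start, goal = (3, 1), (3, 8)
    path = descend(harmonic_potential(grid, goal), start, goal)
    print(path)
```

Replanning as the IR detectors reveal new obstacles would, in this sketch, amount to marking the sensed cells as `'#'` and relaxing again (warm-starting from the previous potential speeds this up). Far from the goal the potential flattens toward 1.0, so on large maps a plain double-precision relaxation can lose the gradient; that is why the tolerance here is tight.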

In about 20 minutes, Thing traveled approximately 5 meters and accrued a translational odometry error of approximately 5 cm. You can see an accelerated movie of this experiment by clicking here.
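Relative to the distance traveled, those figures work out roughly as follows, where e_odo is the accrued odometry error, d the distance traveled, and v̄ the speed averaged over the whole 20-minute run (both inputs are approximate, so the results are too):

$$
\frac{e_{\mathrm{odo}}}{d} \approx \frac{0.05\ \mathrm{m}}{5\ \mathrm{m}} = 0.01 = 1\%,
\qquad
\bar{v} \approx \frac{5\ \mathrm{m}}{20 \times 60\ \mathrm{s}} \approx 4.2\ \mathrm{mm/s}\ (25\ \mathrm{cm/min}).
$$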


Copyright Laboratory for Perceptual Robotics.